As the Founding Product Designer at an engineer-led startup, I tackled cognitive overload in high-stakes live journalism by turning a fragmented process into a cohesive AI workspace for trilingual government summits.

AI

B2B + SAAS + STARTUP

FOUNDING PRODUCT DESIGNER

UX + STRATEGY

2023

CPII AI Meeting Assistant

Designing Real-Time AI Transcription for High-Stakes Multilingual Events

Adopted by Hong Kong’s largest broadcaster with 3.4M+ listeners.

Featured • 2025 Policy Address

CPII Meeting Assistant enhanced the Hong Kong government’s 2025 Policy Address broadcast.

Workflow & Tool Fragmentation in Live Journalism

PROBLEM + REQUIREMENTS

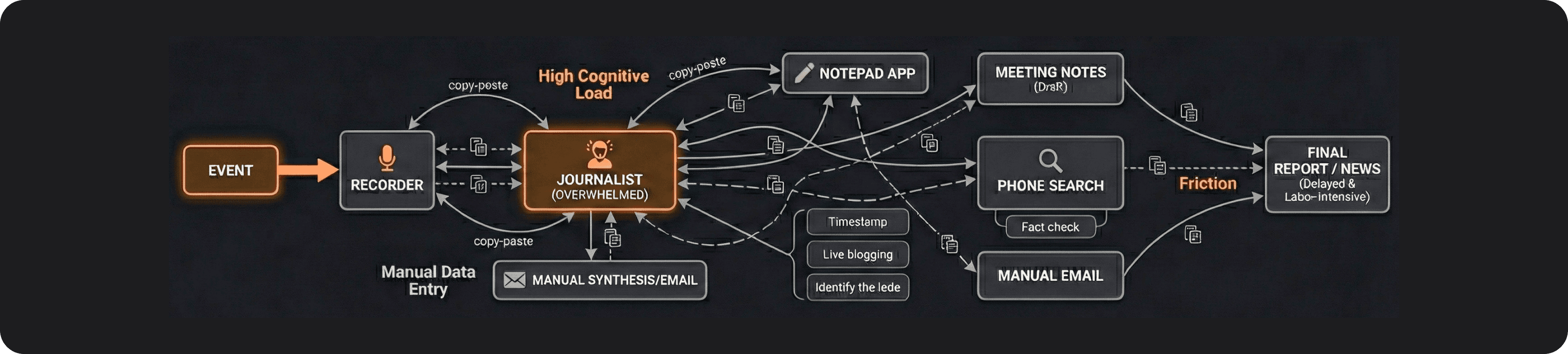

The core problem we set out to solve was workflow fragmentation: the fractured experience of journalists juggling recorders, notepads, and translation apps while trying to stay present at a fast-moving event. Stop to transcribe a quote, and you miss the next sentence. Switch to translate, and you lose your place entirely.

We wanted to create a space where capturing, understanding, and synthesizing could happen simultaneously, without breaking flow.

When I first joined, the product focused on providing real-time transcription and translation as live subtitles during events. However, AI latency and hardware constraints limited scalability. I helped pivot our strategy from generic subtitles to solving a critical workflow problem for journalists covering multilingual events.

Product Evolution

Early product: event subtitles

When I joined the early-stage project, the product focused on live subtitles during events.

Post-event summary

Pivoted product direction to target journalists, introducing post-event summary.

The event workspace

Integrated capturing and synthesizing into a scalable, adaptive workspace.

AI Workspace for Live Journalism

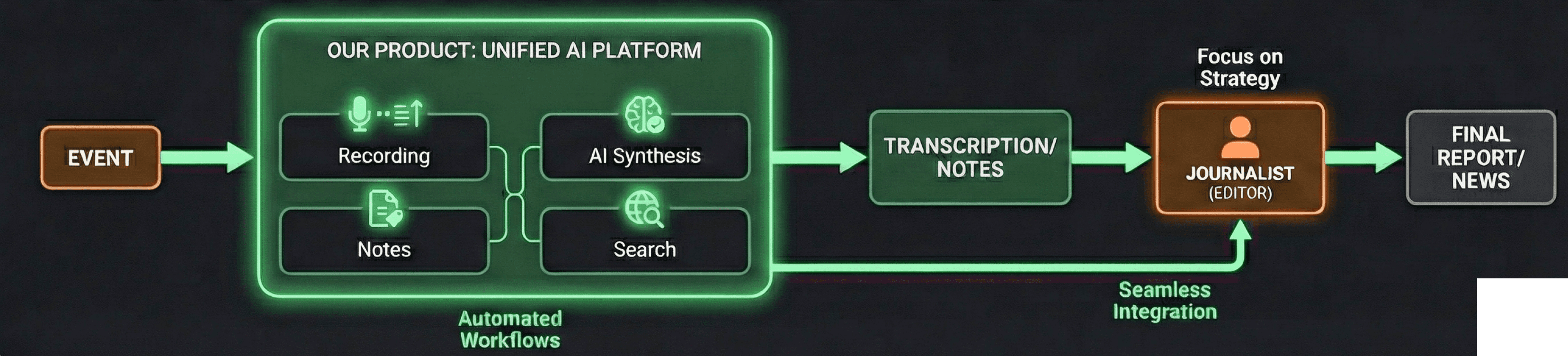

The CPII Meeting Assistant mirrors a journalist’s cognitive workflow, transforming chaotic live streams into structured intelligence through three stages: Augmented Input, Active Processing, and Structured Output.

This linear "Input → Processing → Output" flow creates a stable anchor, making complex AI interactions intuitive in high-velocity environments.

SOLUTION

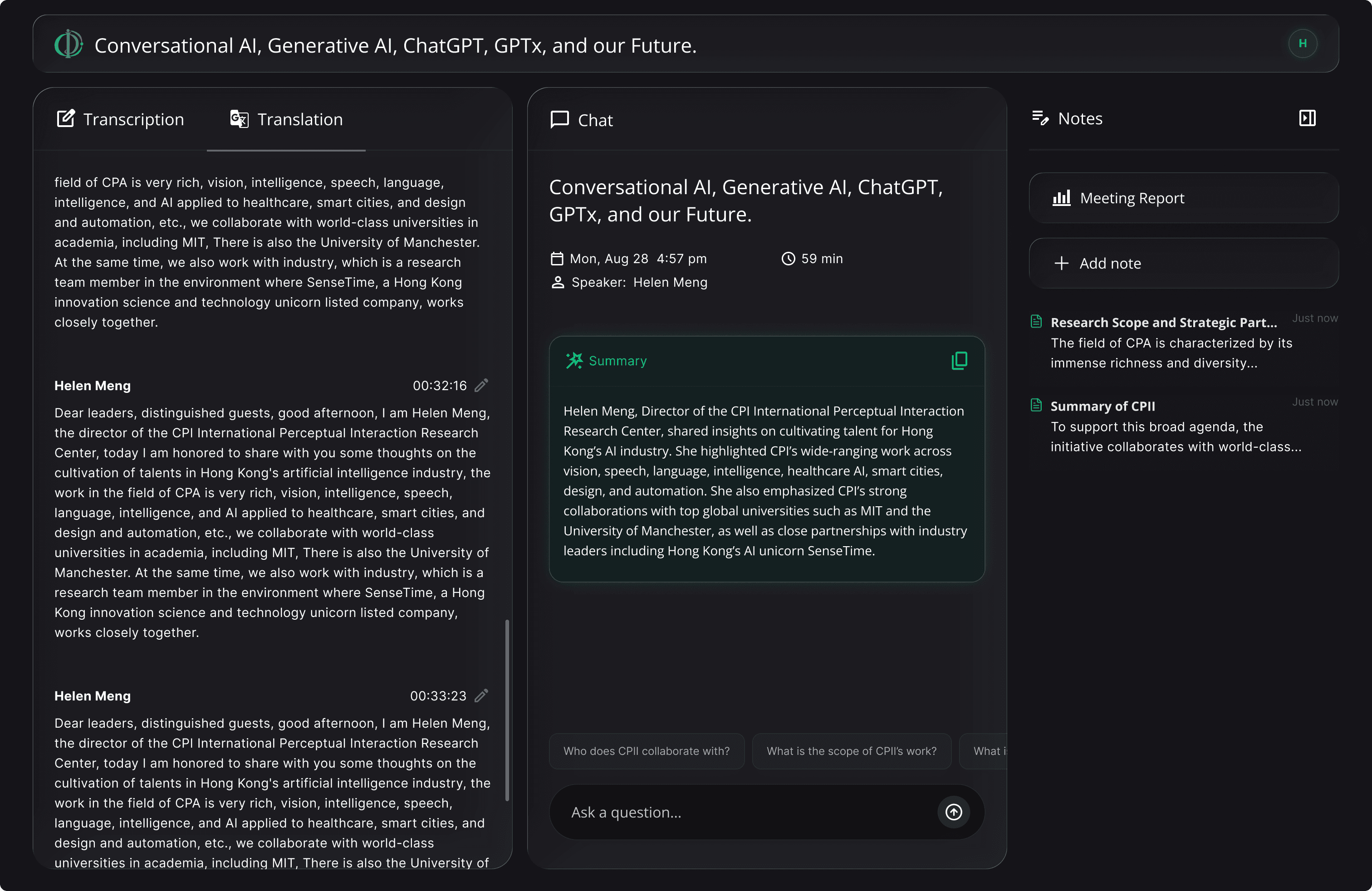

To eliminate the "tool-switching fatigue" of live reporting, I designed a Dynamic Panel System where consumption and verification coexist. The interface anchors the Live Feed as the persistent core, while the Intelligence Layer (Chat & Summary) scales fluidly alongside it. This lets journalists interrogate data and fact-check in real time without ever losing sight of what is happening on stage.

Standard

The default layout, balancing input capture and synthesis.

Transcription & Translation

Optimized for focusing on event content through real-time, navigable text.

Input + Note

Ideal for synthesizing the real-time summary and documenting it in notes.

Dynamic Panel Structure • During Event

Dynamic Panel Structure • After Event

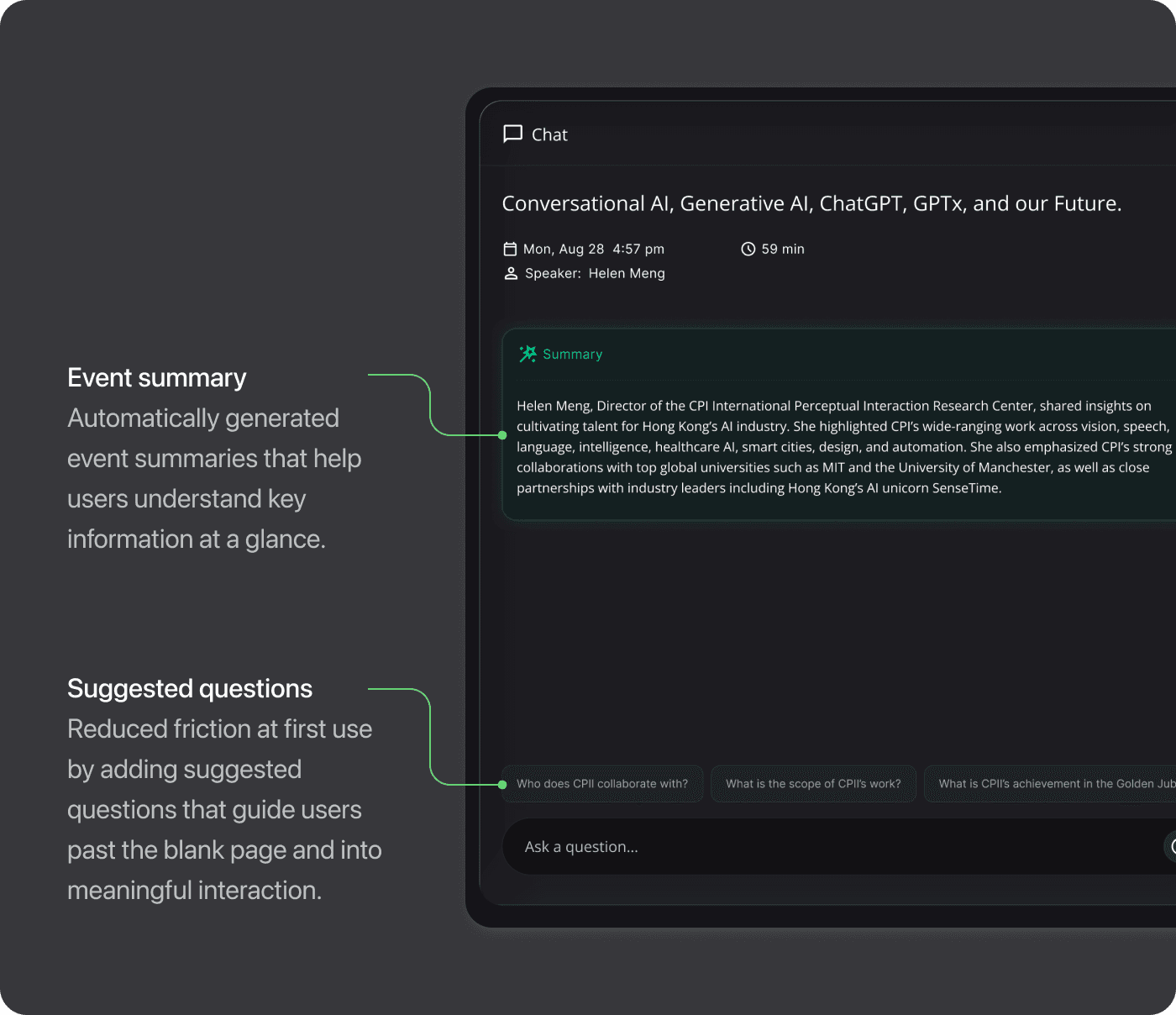

Post-event, the platform transitions from Capture to Analysis mode. In this state, the Bounded AI Chat becomes a verification engine for journalists to interrogate specific details, while the automated Meeting Report handles the heavy lifting of structural synthesis.

Standard

The default layout, balancing inputs and synthesis.

Processing through Chat

Optimized for referencing the transcription while synthesizing event content.

Notes & Meeting Report

Automated post-event synthesis via AI Meeting Reports, consolidating insights from the live transcription and chat.

Output Panel

The transformation space where raw event inputs become outputs. Users can take notes, organize insights, and package their synthesis into shareable work.

Chat Panel

The chat panel is central to the post-event experience; during live events, it shrinks into a lightweight tool for quickly checking event content.

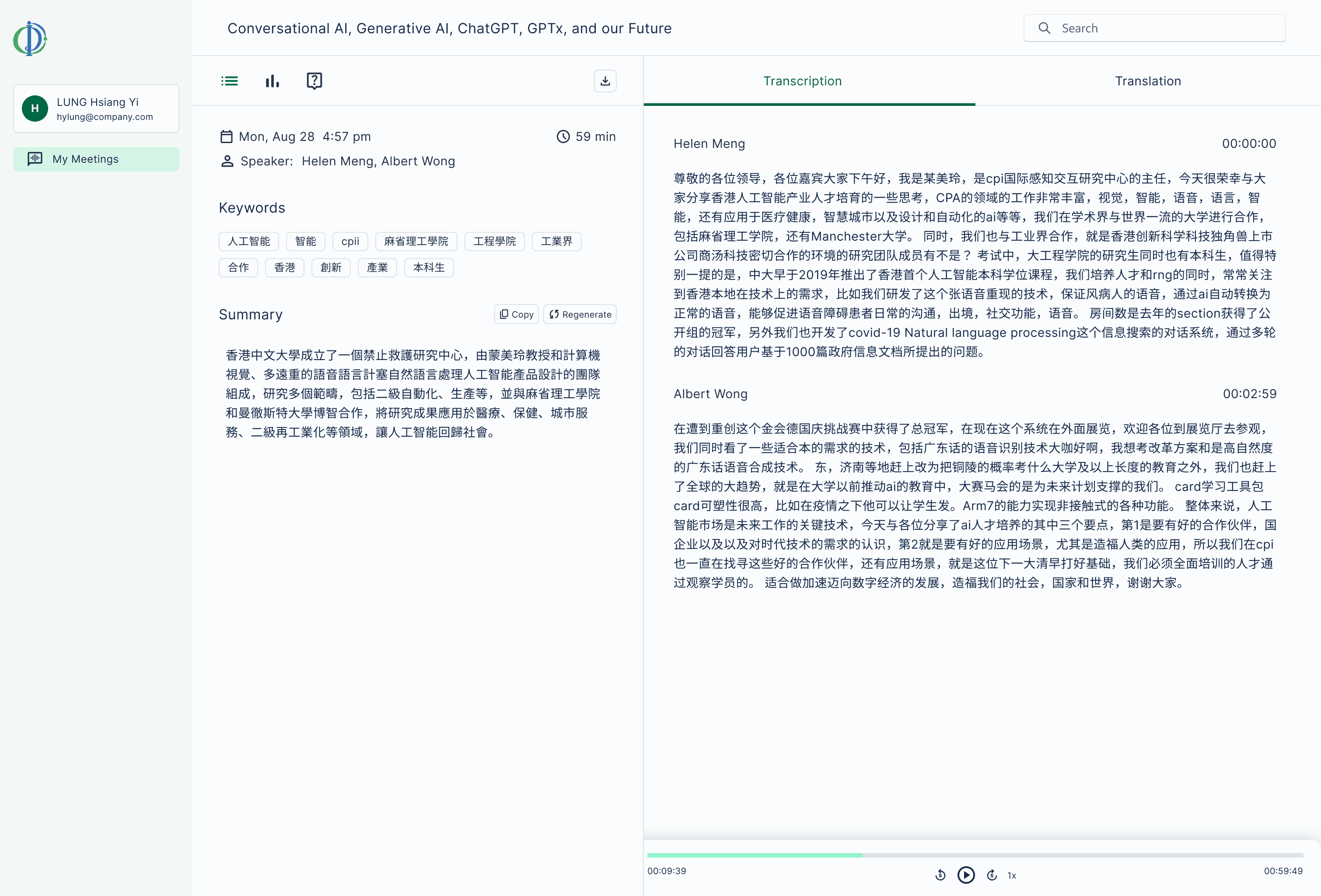

Input Panel

The real-time transcription and translation of the event. It is the foundation of the user experience, supplying the information source for both the chat panel and the post-meeting report.

Before using AI Meeting Assistant: Cognitive burden & context switching

After using AI Meeting Assistant: Streamlined workflow

SOLUTION OVERVIEW

Trilingual Code-Mixed Transcription

A dual-stream interface that decodes Cantonese, English, and Mandarin simultaneously, displaying the original source alongside the translation for instant verification.

Real-time AI Summary

A dynamic AI summary that updates in real-time, condensing narrative blocks into bullet points so journalists can "catch up" without losing the live thread.

During Event: Note-taking and AI Chat

A dynamic panel system combining Note-taking and Bounded AI Chat, allowing users to verify facts and capture quotes without ever navigating away from the live stream.

Post Event: AI Synthesis

An instant post-event report that crystallizes hours of raw data into a structured editorial foundation, removing the manual burden of the first draft.

Iteration

By employing cognitive walkthroughs to analyze journalist workflows and conducting contextual inquiry within live event settings, I identified critical usability gaps. These insights drove the design iterations.

BEFORE

Restrictive Linear Navigation

An "either/or" logic prevents users from viewing Summaries, Chats, and Reports simultaneously.

Inefficient Use of Real Estate

The layout prioritizes navigation between different events, yet user testing shows journalists rarely switch contexts this frequently.

Buried AI Value Proposition

Static event details dominate the visual hierarchy, obscuring the high-value AI insights that differentiate the product.

AFTER

Dynamic Cross-Referencing

Breaks linear constraints, enabling users to verify and compare insights across multiple active panels.

Distraction-Free Dark Mode

Dark mode reduces visual stimulation at low-light live events, keeping the tool unobtrusive for users and the people around them.

Future-Proof Layout

The panel-based design is built to grow, allowing for the easy integration of complex post-MVP features.

Rapid Prototyping with Lovable in a Vibe-Coding Workflow

Static mockups often fail to convey the nuance of LLM streaming behaviors. To solve this, in 2025, I used Lovable to build high-fidelity prototypes of the AI generation process. This allowed engineers to visualize the animation and behavior, resulting in a more efficient implementation cycle.

Simulated natural speech patterns by animating the transcription and translation at variable speeds.

Prototyped a split interface that generates the next paragraph of text while simultaneously streaming a summary of the previous section.

AI model for navigating the chaos of trilingual “code-mixing”

Hong Kong’s unique code-mixing of Cantonese, English, and Mandarin often breaks standard AI transcription. The CPII Meeting Assistant is engineered for this complexity, delivering the accuracy that high-stakes government summits and ceremonial events demand.

CONTEXT

Journalists & Broadcasters

Professionals covering high-stakes government policy addresses who need to capture precise quotes in real time without errors.

The Public

Viewers of major ceremonial events (e.g., the Government Policy Address) who struggle to follow complex, trilingual speeches in real time.

RESEARCH

In-depth interviews: 5

Contextual inquiries: 2

Key findings: 3

Distraction trap

Every time the journalists switched apps to check a fact, they stopped listening. They were missing the live story because their tools were scattered.

Replay nightmare

Journalists’ text notes and their audio recordings were completely separate. After the event, they had to waste hours scrubbing through the audio file just to find the quote they typed.

Trust gap

Journalists were terrified of publishing a wrong quote. They couldn't trust the English translation if they couldn't see the original Cantonese source to compare it against.